From Hadoop: The Definitive Guide, 2nd Edition

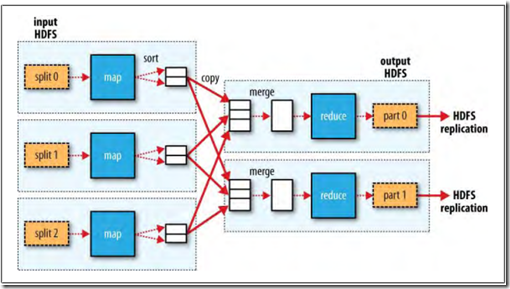

A MapReduce job is a unit of work that the client wants to be performed: it consists of the input data, the MapReduce program, and configuration information. Hadoop runs the job by dividing it into tasks, of which there are two types: map tasks and reduce tasks.

There are two types of nodes that control the job execution process: a jobtracker and a number of tasktrackers. The jobtracker coordinates all the jobs run on the system by scheduling tasks to run on tasktrackers. Tasktrackers run tasks and send progress reports to the jobtracker, which keeps a record of the overall progress of each job. If a task fails, the jobtracker can reschedule it on a different tasktracker.
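The rescheduling behaviour can be sketched as a small simulation. This is not Hadoop code: `run_with_retries`, the tracker names, and the `max_attempts` limit are all hypothetical, and a task is modelled as a plain function that raises `RuntimeError` when it fails on a given tasktracker.

```python
def run_with_retries(task, tasktrackers, max_attempts=4):
    """Try a task on successive tasktrackers until one attempt succeeds.

    Returns (result, attempted_trackers). The max_attempts value is an
    illustrative assumption, not a setting read from Hadoop.
    """
    attempted = []
    for tracker in tasktrackers[:max_attempts]:
        attempted.append(tracker)
        try:
            # A successful attempt ends the loop immediately.
            return task(tracker), attempted
        except RuntimeError:
            # The attempt failed: move on to a different tasktracker.
            continue
    raise RuntimeError("task failed on every attempted tasktracker: %s" % attempted)


def flaky_task(tracker):
    """Hypothetical task that always fails on the tracker named 'tt1'."""
    if tracker == "tt1":
        raise RuntimeError("simulated failure on tt1")
    return "done on " + tracker


result, attempted = run_with_retries(flaky_task, ["tt1", "tt2", "tt3"])
```

Here the first attempt fails on `tt1` and the task is rerun on `tt2`, so the failure of one node does not fail the job.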

Hadoop divides the input to a MapReduce job into small, fixed-size pieces called input splits and creates one map task for each split, so the splits can be processed in parallel.
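A minimal sketch of how fixed-size splitting works, assuming a single file described only by its size in bytes. The function name `compute_splits` and the 64 MB split size are illustrative choices; Hadoop's real input formats apply additional rules that this sketch ignores.

```python
def compute_splits(file_size, split_size):
    """Return a list of (offset, length) pairs that cover the whole file."""
    if split_size <= 0:
        raise ValueError("split_size must be positive")
    splits = []
    offset = 0
    while offset < file_size:
        # The last split may be shorter than split_size.
        length = min(split_size, file_size - offset)
        splits.append((offset, length))
        offset += length
    return splits


MB = 1024 * 1024
# A 200 MB file with 64 MB splits gives three full splits and one 8 MB split,
# and therefore four map tasks.
splits = compute_splits(200 * MB, 64 * MB)
num_map_tasks = len(splits)
```

Each returned pair would correspond to one map task, which is why a smaller split size yields more tasks and more parallelism, at the cost of more per-task overhead.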

Hadoop does its best to run the map task on a node where the input data resides in HDFS. This is called the data locality optimization: it avoids transferring the blocks across the network to the node running the map task, which saves bandwidth.
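The idea behind the data locality optimization can be sketched as a node-selection rule. This is a simplified assumption, not the jobtracker's actual scheduling logic: `pick_node` and the node names are hypothetical, and only two cases (data-local or not) are modelled.

```python
def pick_node(replica_nodes, free_nodes):
    """Choose a node for a map task.

    replica_nodes: set of nodes holding a replica of the split's HDFS block.
    free_nodes: list of nodes that currently have a free map slot.
    Returns (node, is_data_local); node is None when no slot is free.
    """
    for node in free_nodes:
        if node in replica_nodes:
            # The task reads its input from local disk; no network transfer.
            return node, True
    if free_nodes:
        # No free node holds the data, so the block must cross the network.
        return free_nodes[0], False
    return None, False


local_choice = pick_node({"node2", "node3"}, ["node1", "node2"])
remote_choice = pick_node({"node3"}, ["node1"])
```

In the first call `node2` is preferred even though `node1` is listed first, because `node2` already stores the block.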

Map tasks write their output to the local disk, not to HDFS.

Reduce tasks don’t have the advantage of data locality—the input to a single reduce task is normally the output from all mappers.

The map task outputs have to be transferred across the network to the node where the reduce task is running, where they are merged and then passed to the user-defined reduce function.
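The merge-then-reduce step can be sketched in plain Python. The sketch assumes that each map output arrives as a list of `(key, value)` pairs already sorted by key; `reduce_side` and `max_temperature` are hypothetical names, and the sample records are made up for illustration.

```python
import heapq
from itertools import groupby
from operator import itemgetter


def reduce_side(map_outputs, reduce_fn):
    """Merge sorted map outputs and call reduce_fn once per key.

    map_outputs: list of lists of (key, value), each sorted by key.
    reduce_fn: function taking (key, list_of_values).
    """
    # Merge the sorted inputs into one stream ordered by key.
    merged = heapq.merge(*map_outputs, key=itemgetter(0))
    results = []
    # Consecutive records with the same key form one reduce call.
    for key, group in groupby(merged, key=itemgetter(0)):
        values = [value for _, value in group]
        results.append(reduce_fn(key, values))
    return results


def max_temperature(year, temperatures):
    """Example user-defined reduce function: keep the maximum value."""
    return (year, max(temperatures))


outputs_from_two_mappers = [
    [("1949", 111), ("1950", 0)],
    [("1949", 78), ("1950", 22), ("1950", -11)],
]
result = reduce_side(outputs_from_two_mappers, max_temperature)
# result == [("1949", 111), ("1950", 22)]
```

Because the merged stream is ordered by key, the reduce function sees all values for a key together, regardless of which mapper produced them.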

The output of the reduce is normally stored in HDFS for reliability. For each HDFS block of the reduce output, the first replica is stored on the local node, with the other replicas stored on off-rack nodes. Thus, writing the reduce output does consume network bandwidth, but only as much as a normal HDFS write pipeline consumes.

The number of reduce tasks is not determined by the size of the input; it is specified independently.

When there are multiple reducers, the map tasks partition their output, each creating one partition for each reduce task. There can be many keys (and their associated values) in each partition, but the records for any given key are all in a single partition. The partitioning can be controlled by a user-defined partitioning function, but normally the default partitioner, which buckets keys using a hash function, works very well.
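Hash partitioning can be sketched as follows. This is an illustration of the technique, not Hadoop's partitioner: `zlib.crc32` stands in for the hash function (Python's built-in `hash` for strings varies between runs), and `partition_for` and `partition_map_output` are hypothetical names.

```python
import zlib
from collections import defaultdict


def partition_for(key, num_reducers):
    """Map a string key to a partition index in [0, num_reducers)."""
    # crc32 is a stand-in hash, chosen because it is deterministic.
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def partition_map_output(records, num_reducers):
    """Group one map task's (key, value) records by target reduce task."""
    partitions = defaultdict(list)
    for key, value in records:
        partitions[partition_for(key, num_reducers)].append((key, value))
    return dict(partitions)


map_output = [("1949", 111), ("1950", 0), ("1949", 78), ("1950", 22)]
partitions = partition_map_output(map_output, num_reducers=3)
```

Since the partition index depends only on the key, every map task sends a given key to the same reduce task, which is what guarantees that all records for a key end up in a single partition.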