前记

几年前在读Google的BigTable论文的时候,当时并没有理解论文里面表达的思想,因而囫囵吞枣,并没有注意到SSTable的概念。再后来开始关注HBase的设计和源码后,开始对BigTable传递的思想慢慢的清晰起来,但是因为事情太多,没有安排出时间重读BigTable的论文。在项目里,我因为自己在学HBase,开始主推HBase,而另一个同事则因为对Cassandra比较感冒,因而他主要关注Cassandra的设计,不过我们两个人偶尔都会讨论一下技术、设计的各种观点和心得,然后他偶然的说了一句:Cassandra和HBase都采用SSTable格式存储,然后我本能的问了一句:什么是SSTable?他并没有回答,可能也不是那么几句能说清楚的,或者他自己也没有尝试的去问过自己这个问题。然而这个问题本身却一直困扰着我,因而趁着现在有一些时间深入学习HBase和Cassandra相关设计的时候先把这个问题弄清楚了。

SSTable的定义

要解释这个术语的真正含义,最好的方法就是从它的出处找答案,所以重新翻开BigTable的论文。在这篇论文中,最初对SSTable是这么描述的(第三页末和第四页初):

简单的非直译:

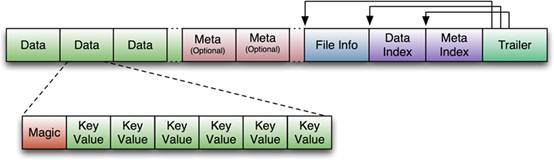

SSTable是Bigtable内部用于数据的文件格式,它的格式为文件本身就是一个排序的、不可变的、持久的Key/Value对Map,其中Key和value都可以是任意的byte字符串。使用Key来查找Value,或通过给定Key范围遍历所有的Key/Value对。每个SSTable包含一系列的Block(一般Block大小为64KB,但是它是可配置的),在SSTable的末尾是Block索引,用于定位Block,这些索引在SSTable打开时被加载到内存中,在查找时首先从内存中的索引二分查找找到Block,然后一次磁盘寻道即可读取到相应的Block。还有一种方案是将这个SSTable加载到内存中,从而在查找和扫描中不需要读取磁盘。这个貌似就是HFile第一个版本的格式么,贴张图感受一下:

在HBase使用过程中,对这个版本的HFile遇到以下一些问题(参考

这里):

1. 解析时内存使用量比较高。

2. Bloom Filter和Block索引会变的很大,而影响启动性能。具体的,Bloom Filter可以增长到100MB每个HFile,而Block索引可以增长到300MB,如果一个HRegionServer中有20个HRegion,则他们分别能增长到2GB和6GB的大小。HRegion需要在打开时,需要加载所有的Block索引到内存中,因而影响启动性能;而在第一次Request时,需要将整个Bloom Filter加载到内存中,再开始查找,因而Bloom Filter太大会影响第一次请求的延迟。

而HFile在版本2中对这些问题做了一些优化,具体会在HFile解析时详细说明。

SSTable作为存储使用

继续BigTable的论文往下走,在5.3 Tablet Serving小节中这样写道(第6页):

第一段和第三段简单描述,非翻译:

在新数据写入时,这个操作首先提交到日志中作为redo纪录,最近的数据存储在内存的排序缓存memtable中;旧的数据存储在一系列的SSTable

中。在recover中,tablet

server从METADATA表中读取metadata,metadata包含了组成Tablet的所有SSTable(纪录了这些SSTable的元

数据信息,如SSTable的位置、StartKey、EndKey等)以及一系列日志中的redo点。Tablet

Server读取SSTable的索引到内存,并replay这些redo点之后的更新来重构memtable。

在读时,完成格式、授权等检查后,读会同时读取SSTable、memtable(HBase中还包含了BlockCache中的数据)并合并他们的结果,由于SSTable和memtable都是字典序排列,因而合并操作可以很高效完成。

SSTable在Compaction过程中的使用

在BigTable论文5.4 Compaction小节中是这样说的:

随着memtable大小增加到一个阀值,这个memtable会被冻住而创建一个新的memtable以供使用,而旧的memtable会转换成一个SSTable而写道GFS中,这个过程叫做minor compaction。这个minor compaction可以减少内存使用量,并可以减少日志大小,因为持久化后的数据可以从日志中删除。在minor compaction过程中,可以继续处理读写请求。

每次minor compaction会生成新的SSTable文件,如果SSTable文件数量增加,则会影响读的性能,因而每次读都需要读取所有SSTable文件,然后合并结果,因而对SSTable文件个数需要有上限,并且时不时的需要在后台做merging compaction,这个merging compaction读取一些SSTable文件和memtable的内容,并将他们合并写入一个新的SSTable中。当这个过程完成后,这些源SSTable和memtable就可以被删除了。

如果一个merging compaction是合并所有SSTable到一个SSTable,则这个过程称做major compaction。一次major compaction会将mark成删除的信息、数据删除,而其他两次compaction则会保留这些信息、数据(mark的形式)。Bigtable会时不时的扫描所有的Tablet,并对它们做major compaction。这个major compaction可以将需要删除的数据真正的删除从而节省空间,并保持系统一致性。SSTable的locality和In Memory

在Bigtable中,它的本地性是由Locality group来定义的,即多个column family可以组合到一个locality group中,在同一个Tablet中,使用单独的SSTable存储这些在同一个locality group的column family。HBase把这个模型简化了,即每个column family在每个HRegion都使用单独的HFile存储,HFile没有locality group的概念,或者一个column family就是一个locality group。在Bigtable中,还可以支持在locality group级别设置是否将所有这个locality group的数据加载到内存中,在HBase中通过column family定义时设置。这个内存加载采用延时加载,主要应用于一些小的column family,并且经常被用到的,从而提升读的性能,因而这样就不需要再从磁盘中读取了。SSTable压缩

Bigtable的压缩是基于locality group级别:

Bigtable的压缩以SSTable中的一个Block为单位,虽然每个Block为压缩单位损失一些空间,但是采用这种方式,我们可以以Block为单位读取、解压、分析,而不是每次以一个“大”的SSTable为单位读取、解压、分析。SSTable的读缓存

为了提升读的性能,Bigtable采用两层缓存机制:

两层缓存分别是:

1. High Level,缓存从SSTable读取的Key/Value对。提升那些倾向重复的读取相同的数据的操作(引用局部性原理)。

2. Low Level,BlockCache,缓存SSTable中的Block。提升那些倾向于读取相近数据的操作。

Bloom Filter

前文有提到Bigtable采用合并读,即需要读取每个SSTable中的相关数据,并合并成一个结果返回,然而每次读都需要读取所有SSTable,自然会耗费性能,因而引入了Bloom Filter,它可以很快速的找到一个RowKey不在某个SSTable中的事实(注:反过来则不成立)。

SSTable设计成Immutable的好处

在SSTable定义中就有提到SSTable是一个Immutable的order map,这个Immutable的设计可以让系统简单很多:

关于Immutable的优点有以下几点:1. 在读SSTable是不需要同步。读写同步只需要在memtable中处理,为了减少memtable的读写竞争,Bigtable将memtable的row设计成copy-on-write,从而读写可以同时进行。2. 永久的移除数据转变为SSTable的Garbage Collect。每个Tablet中的SSTable在METADATA表中有注册,master使用mark-and-sweep算法将SSTable在GC过程中移除。3. 可以让Tablet Split过程变的高效,我们不需要为每个子Tablet创建新的SSTable,而是可以共享父Tablet的SSTable。

posted on 2015-09-25 01:35

DLevin 阅读(15973)

评论(0) 编辑 收藏 所属分类:

HBase