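Setting up Hadoop differs from version to version, so here is a summary of the steps for two of them, to help everyone learn Hadoop more easily.

Hadoop 1.2.0 test environment setup

1: Planning

Set up a Hadoop 1.2.0 environment on Oracle Linux 6.4.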

192.168.100.171 linux1 (namenode)

192.168.100.172 linux2 (secondarynamenode)

192.168.100.173 linux3 (datanode)

192.168.100.174 linux4 (datanode)

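192.168.100.175 linux5 (reserved as a spare datanode)

2: Create the VMware Workstation template machine

a: Install the Oracle Linux 6.4 virtual machine linux1, enable the ssh service, and disable the iptables service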

[root@linux1 ~]# chkconfig sshd on

[root@linux1 ~]# chkconfig iptables off

[root@linux1 ~]# chkconfig ip6tables off

[root@linux1 ~]# chkconfig postfix off

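b: Shut down linux1 and add a new disk in the shared directory to serve as a shared disk (on the SCSI1:0 interface).

Edit linux1.vmx, adding or changing these parameters: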

disk.locking="FALSE"

diskLib.dataCacheMaxSize = "0"

disk.EnableUUID = "TRUE"

scsi1.present = "TRUE"

scsi1.sharedBus = "Virtual"

scsi1.virtualDev = "lsilogic"

c: Restart linux1, download Java to the shared disk, install it, and append the following to the environment file /etc/profile:

JAVA_HOME=/usr/java/jdk1.7.0_21; export JAVA_HOME

JRE_HOME=/usr/java/jdk1.7.0_21/jre; export JRE_HOME

CLASSPATH=.:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar; export CLASSPATH

PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH; export PATH

d: Edit /etc/hosts and add:

192.168.100.171 linux1

192.168.100.172 linux2

192.168.100.173 linux3

192.168.100.174 linux4

192.168.100.175 linux5
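Because the five nodes use consecutive addresses, the /etc/hosts entries above can also be generated rather than typed. The following is a minimal sketch; the function name gen_hosts and its arguments are made up for illustration, and the 192.168.100 prefix is simply the one used in this plan.

```shell
# Hypothetical helper: print /etc/hosts lines for consecutively numbered nodes.
gen_hosts() {
  # $1 = hostname prefix, $2 = last octet of the first node, $3 = node count
  i=0
  while [ "$i" -lt "$3" ]; do
    printf '192.168.100.%d %s%d\n' "$(( $2 + i ))" "$1" "$(( i + 1 ))"
    i=$(( i + 1 ))
  done
}

# Usage (as root): gen_hosts linux 171 5 >> /etc/hosts
```

Appending the output once on the template machine means every clone inherits the same host table.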

e: Edit /etc/sysconfig/selinux and set:

SELINUX=disabled

f: Add the hadoop user and install the Hadoop files:

[root@linux1 ~]# useradd hadoop -g root

[root@linux1 ~]# passwd hadoop

[root@linux1 ~]# cd /

[root@linux1 /]# mkdir /app

[root@linux1 /]# cd /app

[root@linux1 app]# tar -zxf /mnt/mysoft/LinuxSoft/hadoop-1.2.0.tar.gz

[root@linux1 app]# mv hadoop-1.2.0 hadoop120

[root@linux1 app]# chown hadoop:root -R /app/hadoop120

[root@linux1 hadoop120]# su - hadoop

[hadoop@linux1 ~]$ cd /app/hadoop120

[hadoop@linux1 hadoop120]$ mkdir tmp

[hadoop@linux1 hadoop120]$ chmod 777 tmp

g: Edit the Hadoop configuration files:

[hadoop@linux1 hadoop120]$ cd conf

[hadoop@linux1 conf]$ vi core-site.xml

******************************************************************************

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>/app/hadoop120/tmp</value>

</property>

<!-- file system properties -->

<property>

<name>fs.default.name</name>

<value>hdfs://linux1:9000</value>

</property>

</configuration>

******************************************************************************

[hadoop@linux1 conf]$ vi hdfs-site.xml

******************************************************************************

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

</configuration>

******************************************************************************

[hadoop@linux1 conf]$ vi mapred-site.xml

******************************************************************************

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>linux1:9001</value>

</property>

</configuration>

******************************************************************************

[hadoop@linux1 conf]$ vi hadoop-env.sh

******************************************************************************

export JAVA_HOME=/usr/java/jdk1.7.0_21

******************************************************************************
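After editing several XML files by hand it is easy to leave a typo in a property name or value. The sketch below reads a single property back out of a Hadoop configuration file so it can be checked from the shell. The function name get_prop is hypothetical, and the parsing assumes each <name> and <value> tag opens and closes on one line, as in the files above; it is not a general XML parser.

```shell
# Hypothetical helper: print the <value> that follows a given <name>.
get_prop() {
  # $1 = configuration file, $2 = property name
  awk -v want="$2" '
    /<name>/  { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n) }
    /<value>/ { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
                if (n == want) { print v; exit } }
  ' "$1"
}

# Usage: get_prop /app/hadoop120/conf/core-site.xml fs.default.name
```

An empty result means the property was not found, which usually points at a misspelled name.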

h: Configure ssh to use key-based authentication. In /etc/ssh/sshd_config, uncomment:

RSAAuthentication yes

PubkeyAuthentication yes

AuthorizedKeysFile .ssh/authorized_keys

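3: Configure ssh

a: Shut down the template machine and clone it as linux2, linux3, linux4, and linux5:

Change the displayname in each clone's VMware Workstation configuration file;

Update the relevant information in the following files on each virtual machine: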

/etc/udev/rules.d/70-persistent-net.rules

/etc/sysconfig/network

/etc/sysconfig/network-scripts/ifcfg-eth0

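b: Start linux1, linux2, linux3, linux4, and linux5, and make sure they can all ping each other.

c: Configure ssh so that linux1 can reach the other nodes without a password prompt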

[root@linux1 tmp]# su - hadoop

[hadoop@linux1 ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):

Created directory '/home/hadoop/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

17:37:98:fa:7e:5c:e4:8b:b4:7e:bb:59:28:8f:45:bd hadoop@linux1

The key's randomart image is:

+--[ RSA 2048]----+

| |

| o |

| + o |

| . o ... |

| S . o. .|

| o ..o..|

| .o.+oE.|

| . ==oo |

| .oo.=o |

+-----------------+

[hadoop@linux1 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop@linux1

[hadoop@linux1 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop@linux2

[hadoop@linux1 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop@linux3

[hadoop@linux1 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop@linux4

[hadoop@linux1 ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop@linux5

Verify that password-less access works:

[hadoop@linux1 ~]$ ssh linux1 date

[hadoop@linux1 ~]$ ssh linux2 date

[hadoop@linux1 ~]$ ssh linux3 date

[hadoop@linux1 ~]$ ssh linux4 date

[hadoop@linux1 ~]$ ssh linux5 date
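The five checks above can be folded into a loop. This is a sketch rather than part of the original procedure: the function name check_ssh and the overridable SSH variable are assumptions made for illustration. It relies on the OpenSSH option BatchMode=yes, which makes ssh fail instead of prompting for a password, so a node that still needs a password shows up as FAILED rather than hanging.

```shell
# SSH can be overridden with a stub for a dry run (an assumption of this sketch).
SSH=${SSH:-ssh}

# Hypothetical helper: report whether each host accepts key-based login.
check_ssh() {
  rc=0
  for host in "$@"; do
    # BatchMode=yes disables password prompts; ConnectTimeout bounds the wait.
    if $SSH -o BatchMode=yes -o ConnectTimeout=5 "$host" date >/dev/null 2>&1; then
      echo "$host ok"
    else
      echo "$host FAILED"
      rc=1
    fi
  done
  return "$rc"
}

# Usage: check_ssh linux1 linux2 linux3 linux4 linux5
```

The non-zero return value when any host fails makes the function usable as a guard before running start-all.sh.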

4: Edit the masters file (which lists the secondarynamenode host) and the slaves file, then initialize Hadoop

[hadoop@linux1 conf]$ vi masters

192.168.100.172

[hadoop@linux1 conf]$ vi slaves

192.168.100.173

192.168.100.174

[hadoop@linux1 conf]$ cd ..

[hadoop@linux1 hadoop120]$ bin/hadoop namenode -format

5: Start Hadoop

[hadoop@linux1 hadoop120]$ bin/start-all.sh

starting namenode, logging to /app/hadoop120/libexec/../logs/hadoop-hadoop-namenode-linux1.out

192.168.100.174: starting datanode, logging to /app/hadoop120/libexec/../logs/hadoop-hadoop-datanode-linux4.out

192.168.100.173: starting datanode, logging to /app/hadoop120/libexec/../logs/hadoop-hadoop-datanode-linux3.out

192.168.100.172: starting secondarynamenode, logging to /app/hadoop120/libexec/../logs/hadoop-hadoop-secondarynamenode-linux2.out

starting jobtracker, logging to /app/hadoop120/libexec/../logs/hadoop-hadoop-jobtracker-linux1.out

192.168.100.174: starting tasktracker, logging to /app/hadoop120/libexec/../logs/hadoop-hadoop-tasktracker-linux4.out

192.168.100.173: starting tasktracker, logging to /app/hadoop120/libexec/../logs/hadoop-hadoop-tasktracker-linux3.out

6: Configure Linux to boot to the console

Hadoop 2.2.0 test environment setup

1: Planning

Set up a Hadoop 2.2.0 environment on CentOS 6.4, with Java 7 update 21.

192.168.100.171 hadoop1 (namenode)

192.168.100.172 hadoop2 (reserved as a namenode)

192.168.100.173 hadoop3 (datanode)

192.168.100.174 hadoop4 (datanode)

192.168.100.175 hadoop5 (datanode)

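2: Create the virtual machine template (VMware or VirtualBox both work)

a: Install the CentOS 6.4 virtual machine hadoop1, enable the ssh service, and disable the iptables service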

[root@hadoop1 ~]# chkconfig sshd on

[root@hadoop1 ~]# chkconfig iptables off

[root@hadoop1 ~]# chkconfig ip6tables off

[root@hadoop1 ~]# chkconfig postfix off

b: Edit /etc/sysconfig/selinux and set:

SELINUX=disabled

c: Edit the ssh configuration /etc/ssh/sshd_config and uncomment:

RSAAuthentication yes

PubkeyAuthentication yes

AuthorizedKeysFile .ssh/authorized_keys

d: Edit /etc/hosts and add:

192.168.100.171 hadoop1

192.168.100.172 hadoop2

192.168.100.173 hadoop3

192.168.100.174 hadoop4

192.168.100.175 hadoop5

e: Install Java and append the following to the environment file /etc/profile:

export JAVA_HOME=/usr/java/jdk1.7.0_21

export JRE_HOME=/usr/java/jdk1.7.0_21/jre

export HADOOP_PREFIX=/app/hadoop/hadoop220

export HADOOP_COMMON_HOME=${HADOOP_PREFIX}

export HADOOP_HDFS_HOME=${HADOOP_PREFIX}

export HADOOP_MAPRED_HOME=${HADOOP_PREFIX}

export YARN_HOME=${HADOOP_PREFIX}

export CLASSPATH=.:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:${HADOOP_PREFIX}/bin:${HADOOP_PREFIX}/sbin:$PATH

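f: Add the hadoop group and the hadoop user, set the hadoop user's password, then unpack the installation archive into /app/hadoop/hadoop220. Give ownership of the whole /app/hadoop directory to hadoop:hadoop, and create a mydata directory under /app/hadoop/hadoop220 to hold the data.

g: Edit the Hadoop configuration files:

[hadoop@hadoop1 hadoop220]$ cd etc/hadoop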

[hadoop@hadoop1 hadoop]$ vi core-site.xml

******************************************************************************

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.100.171:8000/</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

</configuration>

******************************************************************************

[hadoop@hadoop1 hadoop]$ vi hdfs-site.xml

******************************************************************************

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/app/hadoop/hadoop220/mydata/name</value>

<description>Comma-separated paths hold redundant copies of one another.</description>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/app/hadoop/hadoop220/mydata/data</value>

</property>

<property>

<name>dfs.blocksize</name>

<value>67108864</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

</configuration>

******************************************************************************

[hadoop@hadoop1 hadoop]$ vi yarn-site.xml

******************************************************************************

<configuration>

<property>

<name>yarn.resourcemanager.address</name>

<value>192.168.100.171:8080</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>192.168.100.171:8081</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>192.168.100.171:8082</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

<description>When setting up the ShuffleHandler service on the NodeManager, use "mapreduce_shuffle" as the property value rather than the earlier "mapreduce.shuffle".</description>

</property>

</configuration>

******************************************************************************

[hadoop@hadoop1 hadoop]$ vi mapred-site.xml

******************************************************************************

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.job.tracker</name>

<value>hdfs://192.168.100.171:8001</value>

<final>true</final>

</property>

<property>

<name>mapreduce.map.memory.mb</name>

<value>1536</value>

</property>

<property>

<name>mapreduce.map.java.opts</name>

<value>-Xmx1024M</value>

</property>

<property>

<name>mapreduce.reduce.memory.mb</name>

<value>3072</value>

</property>

<property>

<name>mapreduce.reduce.java.opts</name>

<value>-Xmx2560M</value>

</property>

<property>

<name>mapreduce.task.io.sort.mb</name>

<value>512</value>

</property>

<property>

<name>mapreduce.task.io.sort.factor</name>

<value>100</value>

</property>

<property>

<name>mapreduce.reduce.shuffle.parallelcopies</name>

<value>50</value>

</property>

<property>

<name>mapred.system.dir</name>

<value>file:/app/hadoop/hadoop220/mydata/sysmapred</value>

<final>true</final>

</property>

<property>

<name>mapred.local.dir</name>

<value>file:/app/hadoop/hadoop220/mydata/localmapred</value>

<final>true</final>

</property>

</configuration>

******************************************************************************
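In the mapred-site.xml above, each -Xmx heap size (1024M for map, 2560M for reduce) is smaller than the matching container size (1536 MB and 3072 MB). That gap matters: the JVM needs memory beyond its heap, and YARN kills a container that exceeds its allocation. The sketch below checks that relationship for a pair of values. The function name heap_fits is hypothetical, and it only understands heap sizes written in megabytes with an M suffix.

```shell
# Hypothetical helper: succeed only if the -Xmx heap is below the container size.
heap_fits() {
  # $1 = container size in MB (e.g. the value of mapreduce.map.memory.mb)
  # $2 = JVM options containing -Xmx<N>M (e.g. the value of mapreduce.map.java.opts)
  heap=$(printf '%s\n' "$2" | sed -n 's/.*-Xmx\([0-9][0-9]*\)[Mm].*/\1/p')
  [ -n "$heap" ] && [ "$heap" -lt "$1" ]
}

# Usage: heap_fits 1536 "-Xmx1024M" && echo "map settings are consistent"
```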

[hadoop@hadoop1 hadoop]$ vi hadoop-env.sh

******************************************************************************

export JAVA_HOME=/usr/java/jdk1.7.0_21

export HADOOP_PREFIX=/app/hadoop/hadoop220

export PATH=$PATH:${HADOOP_PREFIX}/bin:${HADOOP_PREFIX}/sbin

export HADOOP_CONF_HOME=${HADOOP_PREFIX}/etc/hadoop

export HADOOP_COMMON_HOME=${HADOOP_PREFIX}

export HADOOP_HDFS_HOME=${HADOOP_PREFIX}

export HADOOP_MAPRED_HOME=${HADOOP_PREFIX}

export YARN_HOME=${HADOOP_PREFIX}

export YARN_CONF_DIR=${HADOOP_PREFIX}/etc/hadoop

******************************************************************************

[hadoop@hadoop1 hadoop]$ vi yarn-env.sh

******************************************************************************

export JAVA_HOME=/usr/java/jdk1.7.0_21

export HADOOP_PREFIX=/app/hadoop/hadoop220

export PATH=$PATH:${HADOOP_PREFIX}/bin:${HADOOP_PREFIX}/sbin

export HADOOP_CONF_HOME=${HADOOP_PREFIX}/etc/hadoop

export HADOOP_COMMON_HOME=${HADOOP_PREFIX}

export HADOOP_HDFS_HOME=${HADOOP_PREFIX}

export HADOOP_MAPRED_HOME=${HADOOP_PREFIX}

export YARN_HOME=${HADOOP_PREFIX}

export YARN_CONF_DIR=${HADOOP_PREFIX}/etc/hadoop

******************************************************************************

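3: Configure ssh

a: Shut down the template machine and clone it as hadoop2, hadoop3, hadoop4, and hadoop5:

Change the displayname in each clone's VMware Workstation configuration file;

Update the relevant information in the following files on each virtual machine: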

/etc/udev/rules.d/70-persistent-net.rules

/etc/sysconfig/network

/etc/sysconfig/network-scripts/ifcfg-eth0

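b: Start hadoop1, hadoop2, hadoop3, hadoop4, and hadoop5, and make sure they can all ping each other.

c: Configure password-less ssh login

Log in to each node as the hadoop user and generate a key pair on each: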

[hadoop@hadoop1 ~]$ ssh-keygen -t rsa

[hadoop@hadoop2 ~]$ ssh-keygen -t rsa

[hadoop@hadoop3 ~]$ ssh-keygen -t rsa

[hadoop@hadoop4 ~]$ ssh-keygen -t rsa

[hadoop@hadoop5 ~]$ ssh-keygen -t rsa

Switch to hadoop1 and merge the public keys of all nodes:

[hadoop@hadoop1 .ssh]$ ssh hadoop1 cat /home/hadoop/.ssh/id_rsa.pub>>authorized_keys

[hadoop@hadoop1 .ssh]$ ssh hadoop2 cat /home/hadoop/.ssh/id_rsa.pub>>authorized_keys

[hadoop@hadoop1 .ssh]$ ssh hadoop3 cat /home/hadoop/.ssh/id_rsa.pub>>authorized_keys

[hadoop@hadoop1 .ssh]$ ssh hadoop4 cat /home/hadoop/.ssh/id_rsa.pub>>authorized_keys

[hadoop@hadoop1 .ssh]$ ssh hadoop5 cat /home/hadoop/.ssh/id_rsa.pub>>authorized_keys

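Be sure to change the permissions on the authorized_keys file; otherwise ssh will still ask for a password at login:

[hadoop@hadoop1 .ssh]$ chmod 600 authorized_keys

Distribute the merged public keys to every node: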

[hadoop@hadoop1 .ssh]$ scp authorized_keys hadoop@hadoop2:/home/hadoop/.ssh/authorized_keys

[hadoop@hadoop1 .ssh]$ scp authorized_keys hadoop@hadoop3:/home/hadoop/.ssh/authorized_keys

[hadoop@hadoop1 .ssh]$ scp authorized_keys hadoop@hadoop4:/home/hadoop/.ssh/authorized_keys

[hadoop@hadoop1 .ssh]$ scp authorized_keys hadoop@hadoop5:/home/hadoop/.ssh/authorized_keys

Confirm password-less access by running the following commands on each node:

[hadoop@hadoop1 .ssh]$ ssh hadoop1 date

[hadoop@hadoop1 .ssh]$ ssh hadoop2 date

[hadoop@hadoop1 .ssh]$ ssh hadoop3 date

[hadoop@hadoop1 .ssh]$ ssh hadoop4 date

[hadoop@hadoop1 .ssh]$ ssh hadoop5 date

4: Initialize Hadoop

[hadoop@hadoop1 hadoop220]$ hdfs namenode -format

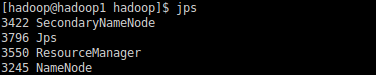

5: Start Hadoop

[hadoop@hadoop1 hadoop]$ start-dfs.sh

[hadoop@hadoop1 hadoop]$ start-yarn.sh
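Once both scripts return, the jps tool that ships with the JDK lists the Java processes running on a node, which is a quick way to confirm that the daemons really started. The sketch below compares that listing against a list of expected daemon names. The function name missing_daemons is hypothetical, and which daemons to expect on which node (for example NameNode and ResourceManager on hadoop1) is an assumption that depends on your own layout.

```shell
# Hypothetical helper: read "jps"-style lines ("<pid> <ClassName>") on stdin
# and print every expected daemon name (given as arguments) that is absent.
missing_daemons() {
  listing=$(cat)
  for d in "$@"; do
    printf '%s\n' "$listing" \
      | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' \
      || echo "$d"
  done
}

# Usage on a live node: jps | missing_daemons NameNode ResourceManager
```

No output means every expected daemon was found; any name printed is worth looking up in the logs directory.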

6: Web addresses

NameNode

http://192.168.100.171:50070/

ResourceManager

http://192.168.100.171:8088/

7: Test

Upload some files, then run wordcount. One thing to note: Hadoop 2.2 does not let you operate on files under the default HDFS directory the way Hadoop 1.x does; paths need the hdfs: prefix (this can be configured so that the prefix is not needed, but I have not yet found the setting). See the official documentation:

All FS shell commands take path URIs as arguments. The URI format is scheme://authority/path. For HDFS the scheme is hdfs, and for the Local FS the scheme is file. The scheme and authority are optional. If not specified, the default scheme specified in the configuration is used. An HDFS file or directory such as /parent/child can be specified as hdfs://namenodehost/parent/child or simply as /parent/child (given that your configuration is set to point to hdfs://namenodehost).
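The rule quoted from the documentation can be written out as a small function: a path that already carries a scheme is left alone, and an absolute path is prefixed with the default file system. The sketch below also covers relative paths, which HDFS resolves against the user's home directory /user/<username>, so a relative path fails if that directory has not been created yet. The function name qualify and its arguments are made up for illustration; this mimics the resolution rule and is not Hadoop's own code.

```shell
# Hypothetical helper: show the full URI a path would resolve to.
qualify() {
  # $1 = default file system (the fs.defaultFS value)
  # $2 = path or URI as typed on the command line
  # $3 = user name, used only for relative paths
  case "$2" in
    *://*) printf '%s\n' "$2" ;;                              # already has a scheme
    /*)    printf '%s%s\n' "${1%/}" "$2" ;;                   # absolute path
    *)     printf '%s/user/%s/%s\n' "${1%/}" "$3" "$2" ;;     # relative to the home directory
  esac
}

# Usage: qualify hdfs://192.168.100.171:8000/ /input hadoop
```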

[hadoop@hadoop1 hadoop220]$ hdfs dfs -mkdir hdfs://192.168.100.171:8000/input

[hadoop@hadoop1 hadoop220]$ hdfs dfs -put ./etc/hadoop/slaves hdfs://192.168.100.171:8000/input/slaves

[hadoop@hadoop1 hadoop220]$ hdfs dfs -put ./etc/hadoop/masters hdfs://192.168.100.171:8000/input/masters

[hadoop@hadoop1 hadoop220]$ hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.2.0.jar wordcount hdfs://192.168.100.171:8000/input hdfs://192.168.100.171:8000/output

Finally done!

This article is reposted from:

Collection of environment setups for different Hadoop versions

http://www.aboutyun.com/forum.php?mod=viewthread&tid=5116&fromuid=3243 (source: about云开发)